The Illusion of the "Finished" Product

In the traditional software era, "launching" was a destination. You spent six months building, you hit a button, and the product was "done" until the next major version. In the age of Vibe Engineering, launching is merely the starting gun for the most important phase of your journey: Learning.

The psychological trap most founders fall into is the "Arrival Fallacy." They believe that once the code is in production and the Stripe integration is live, the hard work is over. In reality, an AI product is a living organism. Unlike a traditional CRUD (Create, Read, Update, Delete) app where the logic is deterministic, AI is probabilistic. This means that even if you don't change a single line of code, the world around your app is shifting, and the "vibe" of your responses is constantly under threat of degradation.

This post is about the Human-in-the-Loop (HITL) framework—the secret sauce that turns a clever AI wrapper into a living, breathing, and evolving business. We are moving beyond the "set it and forget it" mentality and entering the "continuous refinement" loop.

The Reality of "Vibe Decay"

Before we talk about learning, we have to talk about "Vibe Decay." AI applications are uniquely susceptible to environmental changes that traditional software never had to worry about. If you aren't actively learning, your product is actively dying.

- Model Drift: OpenAI or Anthropic might update their underlying models overnight to improve safety or efficiency. Suddenly, your "professional" sounding AI starts talking like a teenager, or it stops following the specific JSON format your frontend needs. These "silent updates" can break your product without throwing a single error code in your logs.

- User Evolution: As users get more comfortable with AI, their expectations rise. A response that felt "magical" in month one feels "generic" by month three. Users start to recognize GPT-4’s specific rhetorical flourishes (like starting every conclusion with "In summary...") and they begin to crave something more bespoke.

- Knowledge Staleness: If your AI is relying on data from last week, but the market moved yesterday, the "vibe" becomes irrelevant. In the AI economy, the value of information has a shorter half-life than ever before.

Learning isn't just about adding features; it’s about defending your product against this decay. It’s about ensuring that the "Delta" between what the user expects and what the AI provides is always shrinking, never expanding.
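One cheap defense against the silent format breakage described under Model Drift is a scheduled "canary" that checks a sample response against the shape your frontend expects. Here is a minimal sketch, assuming your responses are JSON objects; the field names (`title`, `summary`, `tags`) and the `validate_response` helper are hypothetical placeholders for your own contract.

```python
import json

# Hypothetical contract: field name -> expected Python type.
EXPECTED_FIELDS = {"title": str, "summary": str, "tags": list}

def validate_response(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the shape is intact."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    problems = []
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in data:
            problems.append(f"missing field: {name}")
        elif not isinstance(data[name], expected_type):
            problems.append(f"wrong type for {name}")
    return problems
```

Run it against a handful of fixed prompts every day and alert on any non-empty result. That gives you the error signal a silent model update would otherwise never produce.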

Prompt Regression Testing: Treating Words Like Code

The biggest mistake a Vibe Engineer can make is treating their prompts as static text. Prompts are the code. They are the logic gates of the 21st century. And just like you wouldn't push a change to a banking backend without testing it, you shouldn't "tweak" a prompt based on a single bad user experience without seeing how that tweak affects the rest of your system.

When you want to change a prompt to fix a specific bug, how do you know you haven't broken ten other things? In traditional coding, the safeguard is "Regression Testing": re-running a fixed suite of checks after every change. For a Vibe Engineer, the equivalent is a Prompt Library and an automated evaluation workflow.

Creating Your "Gold Standard" Dataset

You cannot learn if you don't have a baseline. You need to compile a list of 20–50 "Input/Output" pairs that represent the perfect version of your product. This is your "North Star."

- Input: A complex, multi-layered user request that usually trips up the AI.

- Ideal Output: The "10/10" response that you would have written yourself.

Defining the "10/10" Metric

A "10/10" isn't a vague feeling; it's a score across four specific pillars:

- Persona Alignment: Does the response use the specific vocabulary and tone defined in your brand guide? (e.g., if you are "Professional yet Wry," does it land between a bank and a comedian, or does it tip into one of them?)

- Constraint Adherence: Did the model follow the negative constraints? (e.g., "Don't use the word 'delve'", "Keep it under 3 paragraphs").

- Groundedness: Is every claim made in the output directly supported by the RAG context provided?

- Utility: Does the response actually solve the user's problem, or is it just polite filler?

This dataset should be diverse. It should include the "Happy Path" (easy questions), the "Edge Cases" (tricky questions), and the "Adversarial Path" (users trying to break the vibe). As you encounter bugs in production, don't just fix them—add the failing input and the corrected output to this Gold Standard library.
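To make the Gold Standard library and the four pillars concrete, here is one possible shape for a case record, plus a deterministic check for the negative constraints. Treat it as a sketch under assumptions: the `GoldCase` fields, the equal weighting of pillars, and the paragraph-splitting rule are illustrative choices, not a prescribed format.

```python
from dataclasses import dataclass, field

PILLARS = ("persona", "constraints", "groundedness", "utility")

@dataclass
class GoldCase:
    case_id: str
    path: str                      # "happy", "edge", or "adversarial"
    user_input: str
    ideal_output: str
    banned_words: list[str] = field(default_factory=list)
    max_paragraphs: int = 3

def constraint_violations(case: GoldCase, output: str) -> list[str]:
    """Deterministic checks for negative constraints (no LLM needed)."""
    violations = []
    lowered = output.lower()
    for word in case.banned_words:
        # Substring match, so "delve" also catches "delved".
        if word.lower() in lowered:
            violations.append(f"banned word: {word}")
    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p for p in output.split("\n\n") if p.strip()]
    if len(paragraphs) > case.max_paragraphs:
        violations.append(f"too many paragraphs: {len(paragraphs)}")
    return violations

def overall_score(pillar_scores: dict[str, float]) -> float:
    """Average the four pillar scores (each 0-10), weighted equally."""
    missing = [p for p in PILLARS if p not in pillar_scores]
    if missing:
        raise ValueError(f"missing pillars: {missing}")
    return sum(pillar_scores[p] for p in PILLARS) / len(PILLARS)
```

Constraint Adherence is the one pillar you can score with plain code. Persona, Groundedness, and Utility usually need a human or a judge model to fill in `pillar_scores`.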

The Model Upgrade Loop

When a new model like GPT-5 or Claude 4 drops, most founders just swap the model name in their API call and hope for the best. A Vibe Engineer runs their "Gold Standard" dataset through the new model and compares the results side-by-side. This is the difference between "Vibe Guessing" and "Vibe Engineering."

- The Tools: Use tools like Promptfoo, LangSmith, or even a simple side-by-side spreadsheet. The goal is to see exactly how the personality of the app changes across versions.

- The Question: "Does this new model improve the accuracy but kill the tone? Is it faster but less creative?"

- LLM-as-a-Judge: Use a stronger model (like Claude 3.5 Sonnet) to grade the outputs of your smaller, faster models based on your specific rubric.

By testing prompts systematically, you ensure that every iteration is a step forward, not a sideways slide into technical debt. You are building a history of what works, creating a proprietary "recipe book" for your brand voice.
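The upgrade loop above can be sketched in a few lines. This is an illustration rather than a replacement for tools like Promptfoo or LangSmith: `model` and `judge` are plain callables you would wrap around your own API calls, and the function names and the `tolerance` threshold are assumptions made for the example.

```python
from typing import Callable

ModelFn = Callable[[str], str]               # user input -> response
JudgeFn = Callable[[str, str, str], float]   # (input, ideal, actual) -> 0-10 score

def run_regression(cases, model: ModelFn, judge: JudgeFn) -> dict[str, float]:
    """Score every gold case for one model. `cases` holds (case_id, input, ideal) tuples."""
    return {cid: judge(inp, ideal, model(inp)) for cid, inp, ideal in cases}

def compare(baseline: dict[str, float], candidate: dict[str, float],
            tolerance: float = 0.5) -> list[tuple[str, float, float]]:
    """Return cases where the candidate drops below baseline by more than `tolerance`,
    worst drop first. A case missing from the candidate counts as a score of 0."""
    regressions = []
    for cid, base_score in baseline.items():
        new_score = candidate.get(cid, 0.0)
        if base_score - new_score > tolerance:
            regressions.append((cid, base_score, new_score))
    return sorted(regressions, key=lambda r: r[1] - r[2], reverse=True)
```

In practice `judge` is where LLM-as-a-Judge plugs in: it sends the input, the ideal output, and the actual output to the grading model along with your rubric and parses a number back. Switch models only when `compare` returns an empty list, or a list you have consciously accepted.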

Semantic Feedback: Listening to the "I Don't Know"

If you’ve built a RAG (Retrieval-Augmented Generation) system—where your AI looks up your own documents to answer questions—the most valuable data point you have is what the AI couldn't find. In the old world, a 404 error was a failure. In the AI world, a "Not Found" is a roadmap.

Turning Silence into Signal

Every time your AI says, "I'm sorry, I don't have information on that," or gives a vague, hallucinated answer because it lacked context, that is a Semantic Gap. It is a direct signal from your market telling you exactly what documentation you are missing.

Traditional founders ignore these logs because they are messy. Vibe Engineers treat them as a "Content Backlog" that dictates their product roadmap.
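Here is a rough sketch of how those messy logs could become a Content Backlog. It assumes each log entry is a dict with `question`, `response`, and a pre-assigned `topic`, and the refusal phrases are hypothetical examples you would tune to your own system prompt. Note that phrase matching only catches explicit "I don't know" answers; vague or hallucinated answers need a judge model or human review.

```python
import re
from collections import Counter

# Hypothetical refusal phrases; adjust to match how your assistant declines.
REFUSAL_PATTERNS = [
    r"i don't have information",
    r"i do not have information",
    r"i couldn't find",
    r"i'm not sure",
]
REFUSAL_RE = re.compile("|".join(REFUSAL_PATTERNS), re.IGNORECASE)

def is_semantic_gap(response: str) -> bool:
    """True if the response contains one of the known refusal phrases."""
    normalized = response.replace("\u2019", "'")  # treat curly apostrophes as straight
    return bool(REFUSAL_RE.search(normalized))

def content_backlog(logs: list[dict], top_n: int = 10) -> list[tuple[str, int]]:
    """Count unanswered questions per topic, most frequent first."""
    counts = Counter(
        entry["topic"] for entry in logs if is_semantic_gap(entry["response"])
    )
    return counts.most_common(top_n)
```

The top of that list is the documentation to write next.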

The Takeaway: In an AI-first startup, "updating the app" often just means "teaching the brain." This requires zero coding and can be done during your morning coffee. By identifying what people want to know vs. what you’ve told the AI, you are essentially letting the users write your product manual for you.

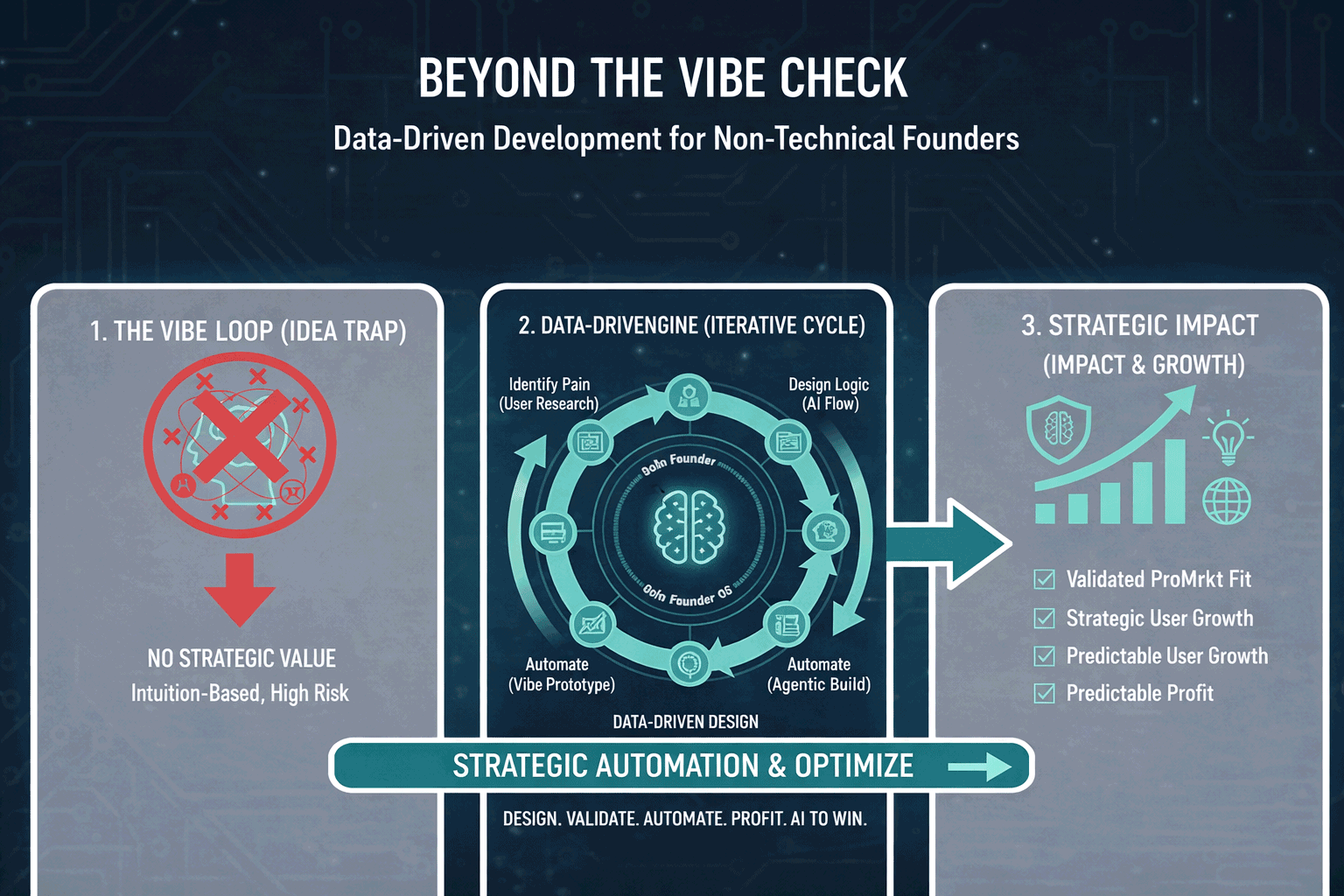

The Human-in-the-Loop (HITL) Engine

We are still in the early days of autonomous AI. For now, the most successful products are those that augment human intelligence rather than trying to replace it entirely. You need to build a "steering wheel" for your AI.

The Feedback UI

Your app should have built-in mechanisms for users to give high-fidelity feedback. A simple "Thumbs Up/Down" is a start, but it's too low-resolution. It tells you that a response was bad, but not why.

- "Correct this answer" button: Allow power users to edit an AI response. When a user corrects an AI output, that corrected pair should automatically be sent to your "Candidate Dataset."

- Specific Labels: Give users a choice: "Too wordy," "Factually incorrect," or "Wrong tone." This structured data is far more useful for prompt fixes and fine-tuning than a bare downvote, because it tells you which failure to address.

- The "Magic" Loop: You are essentially tricking your users into being your QA (Quality Assurance) team. They pay you to help you train your model.
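The feedback mechanisms above map onto a small data model. The sketch below is one way to do it, not a required schema: the `Feedback` fields and the shape of the candidate pair are assumptions, while the three labels come straight from the list above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class FeedbackLabel(str, Enum):
    TOO_WORDY = "too_wordy"
    FACTUALLY_INCORRECT = "factually_incorrect"
    WRONG_TONE = "wrong_tone"

@dataclass
class Feedback:
    user_input: str
    ai_output: str
    thumbs_up: bool
    label: Optional[FeedbackLabel] = None
    corrected_output: Optional[str] = None

def to_candidate_pair(fb: Feedback) -> Optional[dict]:
    """Turn a user correction into a Candidate Dataset entry.
    Returns None when there is no correction or it matches the original."""
    if not fb.corrected_output or fb.corrected_output.strip() == fb.ai_output.strip():
        return None
    return {
        "input": fb.user_input,
        "rejected": fb.ai_output,
        "ideal_output": fb.corrected_output.strip(),
        "label": fb.label.value if fb.label else None,
    }
```

Keeping the rejected output next to the corrected one is a deliberate choice: the pair shows what to avoid as well as what to aim for, which is useful whether you later edit prompts or fine-tune.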

Managing the Negative Feedback Loop

Negative feedback isn't just an ego hit; it's your most expensive and valuable research. When a user hits "dislike," don't just log it—trigger a Diagnostic Flow.

Use a "Critic Model" (a separate LLM call) to analyze the disliked interaction. Ask the Critic: "Why did the user hate this? Was it a hallucination, a prompt failure, or a RAG retrieval error?" If the Critic identifies a clear failure, flag it for immediate manual review. By automating the triage of your failures, you can have most of them sorted by root cause before you finish your morning coffee, so your own time goes into fixes rather than diagnosis.
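A Diagnostic Flow along these lines might look like the following. It is a sketch under assumptions: `call_critic` stands in for whatever function sends a prompt to your chosen LLM and returns its text, and the prompt wording and JSON verdict format are illustrative. Anything the Critic cannot classify cleanly falls back to "unclear" rather than being guessed at.

```python
import json
from typing import Callable

FAILURE_TYPES = {"hallucination", "prompt_failure", "retrieval_error", "unclear"}

# Doubled braces are literal braces; single braces are filled in by str.format.
CRITIC_PROMPT = (
    "A user disliked this interaction.\n"
    "Question: {question}\nRetrieved context: {context}\nAnswer: {answer}\n"
    "Reply with JSON only: "
    '{{"failure_type": "hallucination" | "prompt_failure" | '
    '"retrieval_error" | "unclear", "reason": "<one sentence>"}}'
)

def triage(interaction: dict, call_critic: Callable[[str], str]) -> dict:
    """Ask the critic why a response failed and decide whether to escalate."""
    raw = call_critic(CRITIC_PROMPT.format(**interaction))
    try:
        verdict = json.loads(raw)
    except json.JSONDecodeError:
        verdict = {}
    if not isinstance(verdict, dict):
        verdict = {}
    failure = verdict.get("failure_type")
    if failure not in FAILURE_TYPES:
        failure = "unclear"
    return {
        "failure_type": failure,
        "reason": verdict.get("reason", ""),
        # Only clearly identified failures go to immediate manual review.
        "needs_manual_review": failure != "unclear",
    }
```

The Critic is itself a probabilistic model, so treat its verdict as a sorting hint for your review queue, not as ground truth.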

The "Expert Review" Cadence

As the founder, you should spend 30 minutes a day "grading" AI responses. This is the modern version of "walking the shop floor."

- The 5/10 vs. 10/10: Tag responses that were technically correct but lacked the "vibe."

- The "Aha!" Moment: Look for instances where the AI surprised you with a clever connection. How can you bake that specific logic back into the system prompt?

By labeling your own data, you are creating a proprietary asset. If you ever decide to fine-tune a custom model (like Llama 3 or a specialized Mistral variant), this labeled data is what will make your startup worth millions. Generic wrappers are worth zero because they have zero data gravity. You are building gravity.

Graduation: The Structured Engineering Brief

The "Vibe Engineer" phase is meant to be a period of hyper-growth and discovery. It’s about moving fast and breaking things. But eventually, success brings complexity. You will reach a point where you need "Real Engineering" to handle the scale.

The Hand-off Dossier

Instead of hiring an expensive developer and saying "build me an AI for real estate," you will hand your first hire a Technical Brief that includes:

An engineer who is handed a "Vibe Dossier" is 10x more productive than an engineer handed a blank slate. You’ve already done the hard work of "Product Discovery." You’ve already proven people will pay for the vibe. They just need to build the "Scale."

The Vibe Engineer’s "Moat"

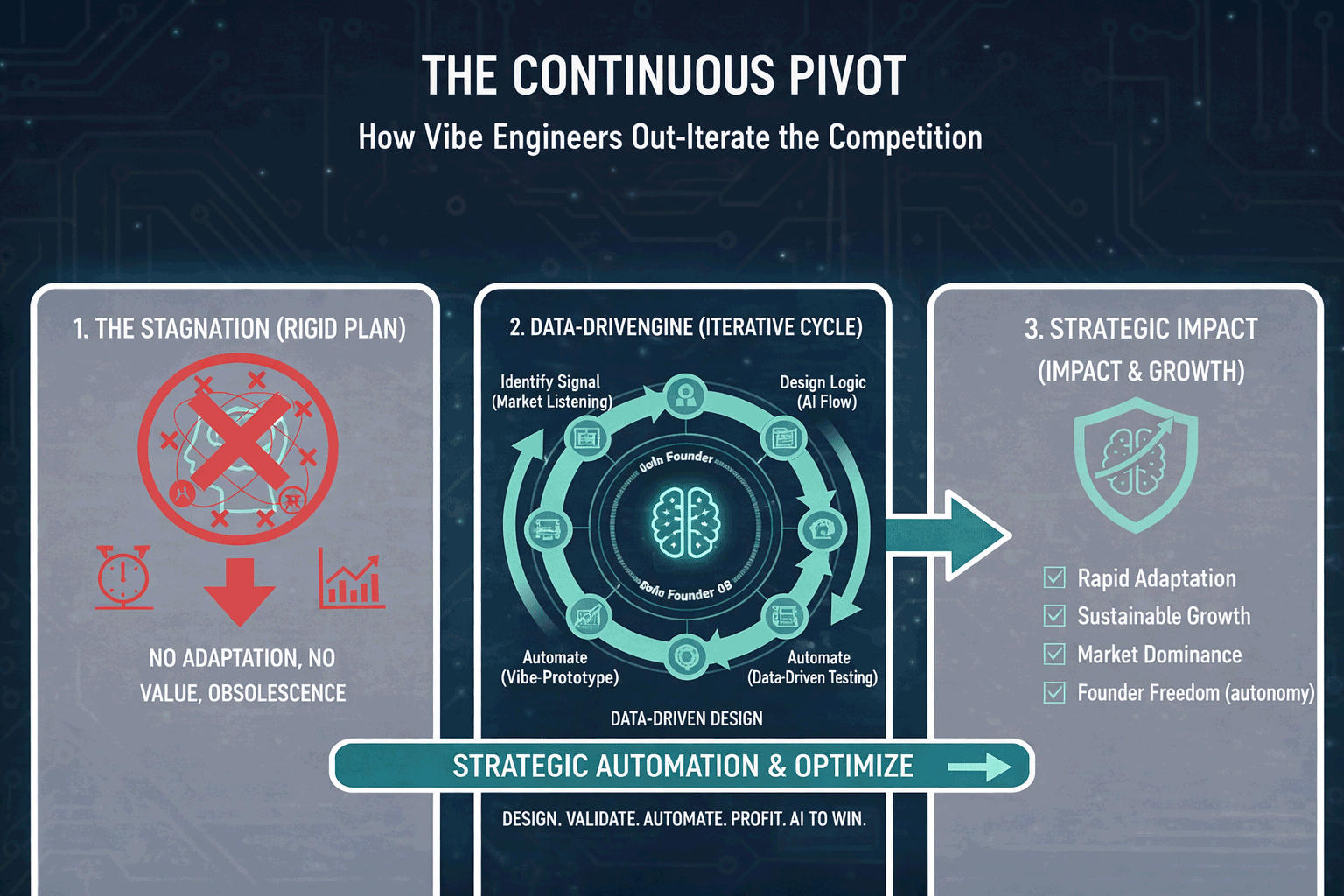

We often hear that "AI has no moats." This is only true for people who stop at the "Build" phase. If you just build a wrapper, you are a commodity. If you build a Learning System, you are a titan.

Your moat is the Flywheel of Learning:

- You Build a system that is easy to change (Post 1).

- You Measure exactly where the vibe is breaking (Post 2).

- You Learn by updating prompts and knowledge bases in real-time.

- Which leads to a better Build... and the cycle repeats.

While your competitor is talking to a dev agency about a feature request that will take three weeks to implement, you’ve already updated your RAG system, tested the new prompt against your Gold Standard, and shipped the fix to Vercel. In the AI era, speed is the only moat.

Conclusion: The Era of the Vibe Architect

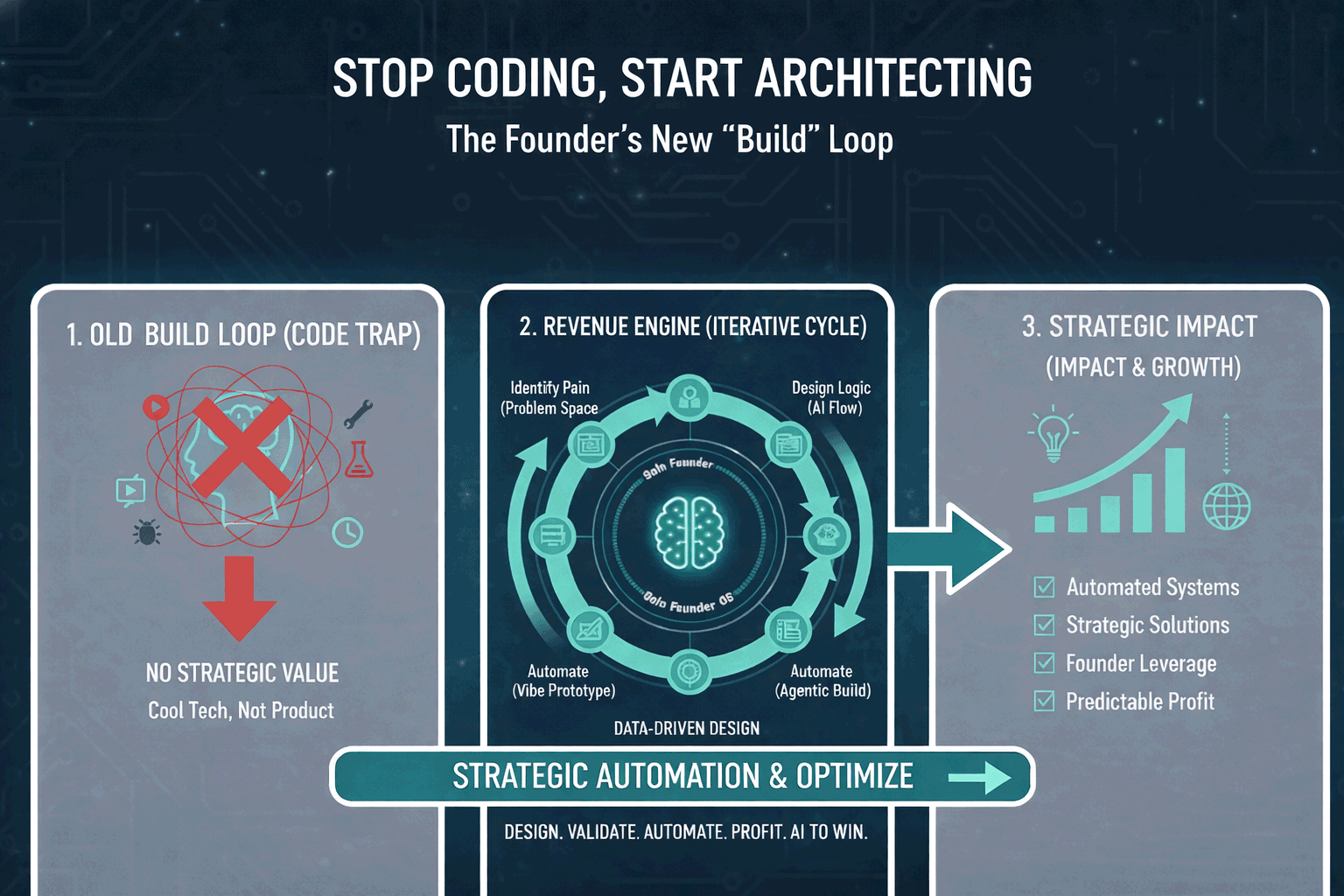

The "Vibe Engineer’s Playbook" isn't a guide on how to be a "lite" version of a developer. It's a guide on how to be a Heavy version of a Founder.

In the old world, the founder was the "Idea Person" who was often disconnected from the technical reality. In the new world, the Vibe Engineer is the Chief Systems Architect. You understand the pipes, you control the brain, and you own the data. You aren't just managing people; you are managing intelligence.

The old world of the disconnected executive is dead. In its place is the Vibe Architect—the founder who can build, measure, and learn at the speed of thought. You have moved from:

- "I hope this works" to "I know why this works."

- "I need to hire someone to fix this" to "I have the tools to iterate this myself."

- "I have a product" to "I have a learning system."

The Vibe Engineering journey is a loop. Every day, you wake up, look at your PostHog dashboards, look at your Sentry errors, and ask: "How can I improve the vibe today?" If you can do that 1% better every day, you will be unstoppable.

This concludes The Vibe Engineer’s Playbook blog series. The tools are ready, the models are waiting, and the barrier to entry has never been lower. Now, stop reading and start architecting.
